It’s a peculiar truth that we don’t understand how large language models (LLMs) actually work. We designed them. We built them. We trained them. But their inner workings are largely mysterious. Well, they were. That’s less true now, thanks to new research by Anthropic that was inspired by brain-scanning techniques and helps to explain why chatbots hallucinate and why they are so terrible with numbers.

The problem is that while we understand how to design and build a model, we don’t know how the zillions of weights (the parameters, numerical values set during training that encode relationships within the data) actually give rise to what appear to be cogent outputs.

“Open up a large language model and all you will see is billions of numbers—the parameters,” says Joshua Batson, a research scientist at Anthropic (via MIT Technology Review), of what you will find if you peer inside the black box that is a fully trained AI model. “It’s not illuminating,” he notes.

To understand what’s actually happening, Anthropic’s researchers developed a new technique, called circuit tracing, to track the decision-making processes inside a large language model step by step. They then applied it to their own Claude 3.5 Haiku LLM.

Anthropic says its approach was inspired by the brain-scanning techniques used in neuroscience and can identify which components of the model are active at different times. In other words, it’s a little like a brain scanner spotting which parts of the brain are firing during a cognitive process.
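To make the brain-scanner analogy a little more concrete, here is a minimal Python sketch of the most basic version of the idea: recording which internal units of a network fire for a given input. The tiny network, its weights, and the `forward` function and `threshold` parameter are all made up for illustration. This is ordinary activation inspection on a toy model, not Anthropic’s circuit-tracing technique, which is applied to a real LLM at vastly larger scale.

```python
# Hypothetical toy network: 3 inputs -> 4 hidden ReLU units -> 1 output.
# The weights are invented purely for illustration.
W1 = [
    [0.5, -1.0, 0.2],
    [1.0, 0.3, -0.4],
    [-0.6, 0.8, 1.1],
    [0.0, -0.2, 0.7],
]
W2 = [0.9, -0.5, 1.2, 0.4]


def relu(x: float) -> float:
    return max(0.0, x)


def forward(x: list[float], threshold: float = 0.1) -> tuple[float, list[int]]:
    """Run the toy network and report which hidden units 'lit up'."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    # The "scan": list the hidden units whose activation exceeds the threshold.
    active = [i for i, h in enumerate(hidden) if h > threshold]
    output = sum(w * h for w, h in zip(W2, hidden))
    return output, active


for x in ([1, 0, 0], [0, 1, 0], [0, 0, 1]):
    _, active = forward(x)
    print(x, "-> active hidden units:", active)
```

Different inputs light up different subsets of units, which is the kind of pattern an interpretability tool tries to read off, only across millions of components instead of four.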

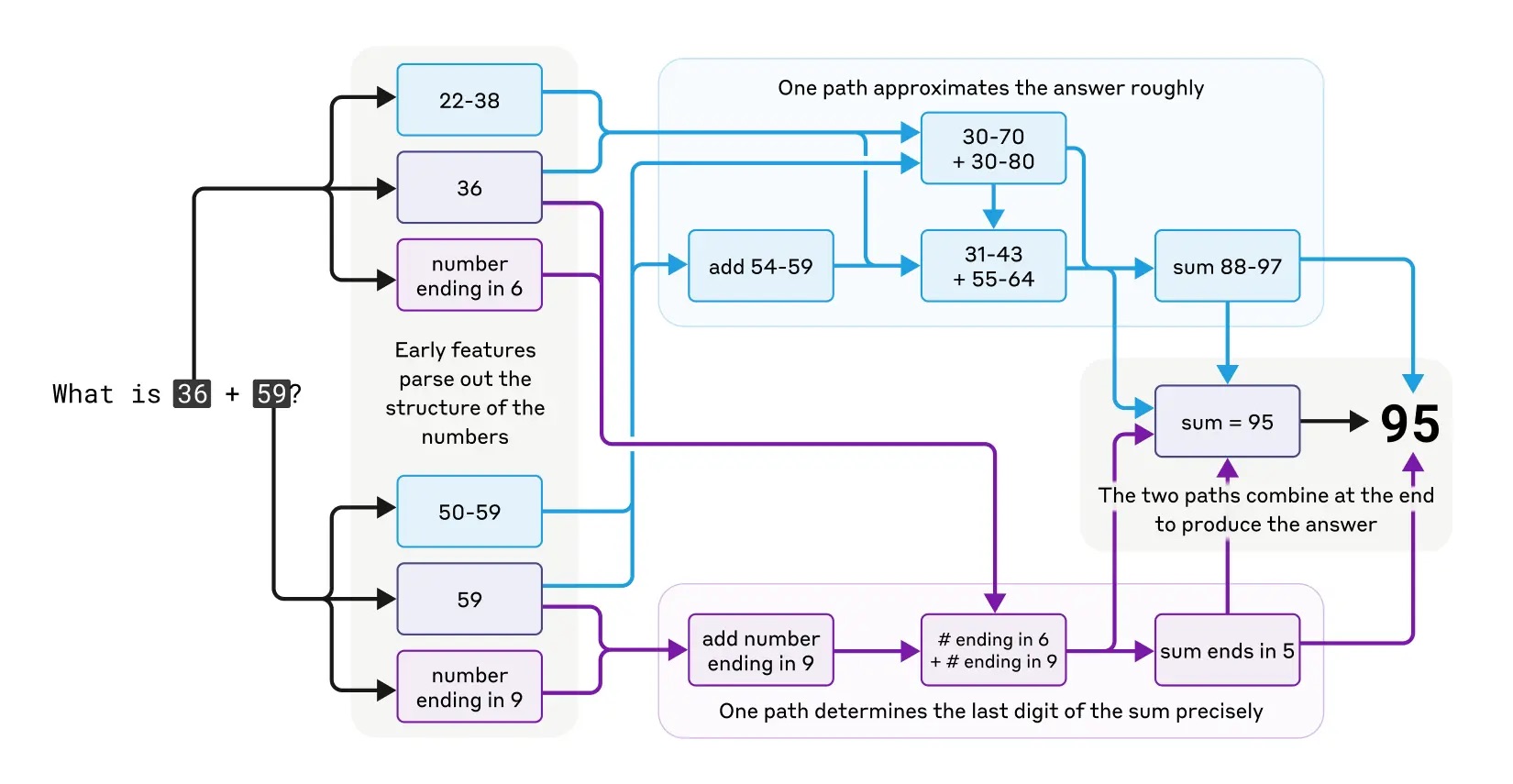

Anthropic made lots of intriguing discoveries using this approach, not least an explanation of why LLMs are so terrible at basic mathematics. “Ask Claude to add 36 and 59 and the model will go through a series of odd steps, including first adding a selection of approximate values (add 40ish and 60ish, add 57ish and 36ish). Towards the end of its process, it comes up with the value 92ish. Meanwhile, another sequence of steps focuses on the last digits, 6 and 9, and determines that the answer must end in a 5. Putting that together with 92ish gives the correct answer of 95,” the MIT article explains.
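The two parallel paths in that quote can be mimicked with a few lines of Python. This is a toy illustration under loose assumptions, not a model of what Claude’s weights actually compute: the rough estimate is simply supplied as the “92ish” value from the quote rather than produced by an approximate path, and the function names `ones_digit_path` and `snap_to_digit` are hypothetical.

```python
def ones_digit_path(a: int, b: int) -> int:
    """Exact path: the last digit of a sum depends only on the operands' last digits."""
    return (a % 10 + b % 10) % 10


def snap_to_digit(rough: int, digit: int) -> int:
    """Return the number ending in `digit` that lies closest to the rough estimate."""
    base = rough - rough % 10 + digit  # candidate in the same tens band as `rough`
    candidates = (base - 10, base, base + 10)
    return min(candidates, key=lambda c: abs(c - rough))


rough = 92                          # the "92ish" estimate, taken from the quote
digit = ones_digit_path(36, 59)     # 6 + 9 = 15, so the answer ends in 5
print(snap_to_digit(rough, digit))  # prints 95
```

The combination only works when the rough estimate lands within a few units of the true sum: feed it 86ish instead and it snaps to 85, a plausible-looking but wrong answer.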

But here’s the really funky bit. If you ask Claude how it got the correct answer of 95, it will apparently tell you, “I added the ones (6+9=15), carried the 1, then added the 10s (3+5+1=9), resulting in 95.” But that explanation merely reflects the standard method for doing the sum that appears in its training data, not what the model actually did.
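For contrast, the textbook column addition that Claude claims to have used (add the ones, carry, then add the tens) looks like this as a short Python sketch. The `column_add` name is hypothetical; the point is simply that this is a different procedure from the two-path approach the researchers actually observed.

```python
def column_add(a: int, b: int) -> tuple[int, list[str]]:
    """Schoolbook addition: add digit columns right to left, carrying any overflow."""
    result, place, carry = 0, 1, 0
    steps = []
    while a or b or carry:
        da, db = a % 10, b % 10
        s = da + db + carry
        steps.append(f"{da}+{db}+{carry}={s}")
        result += (s % 10) * place  # keep this column's last digit
        carry = s // 10             # carry anything over 9 into the next column
        a, b, place = a // 10, b // 10, place * 10
    return result, steps


total, steps = column_add(36, 59)
print(total, steps)  # prints 95 ['6+9+0=15', '3+5+1=9']
```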

In other words, not only does the model use a very, very odd method to do the maths, you can’t trust its explanations of what it has just done. That’s significant: it suggests a model’s own account of its reasoning cannot be relied upon when designing guardrails for AI. Its internal workings need to be understood, too.

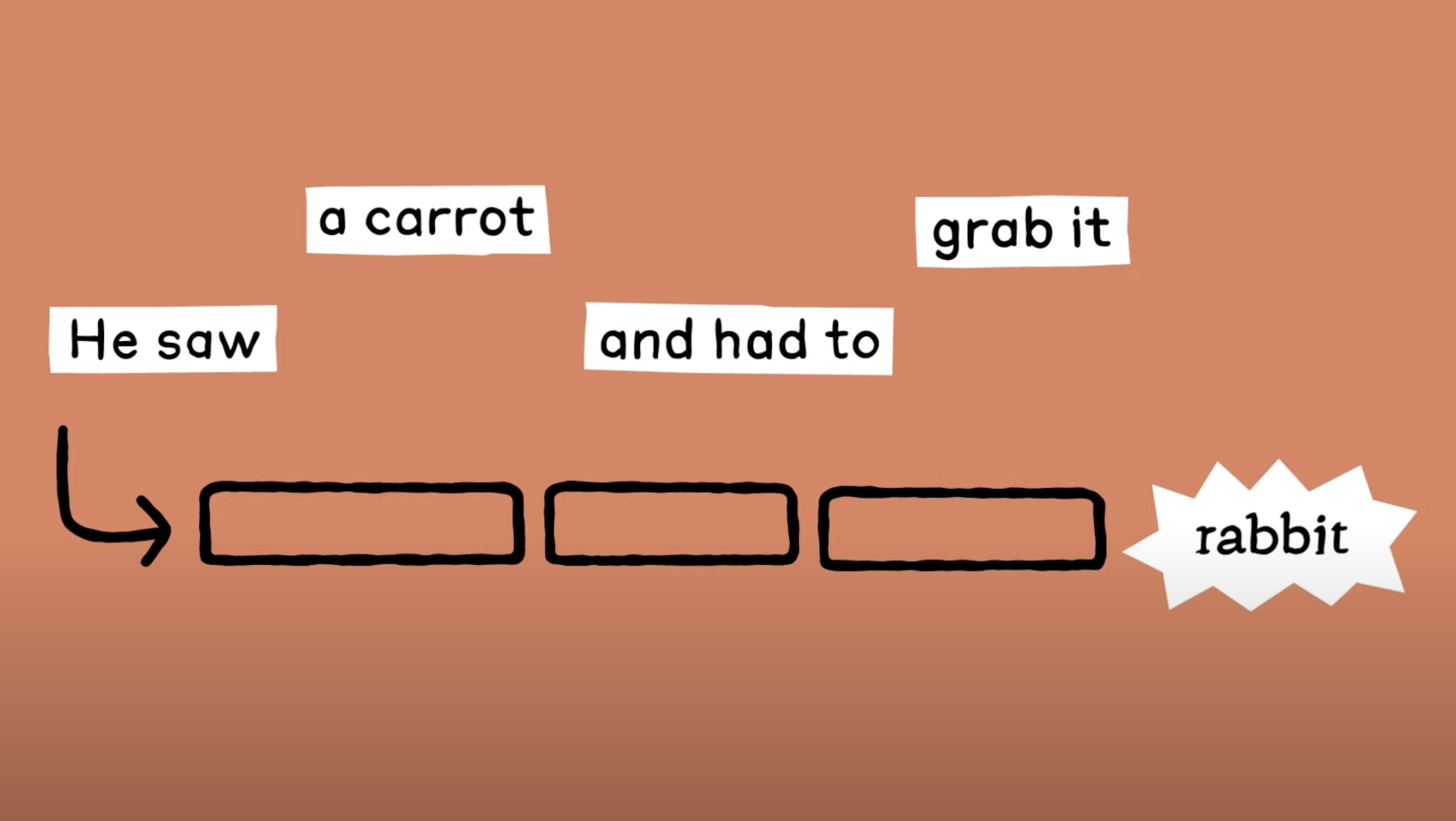

Another very surprising outcome of the research is the discovery that these LLMs do not simply predict the next word one step at a time, as is widely assumed. By tracing how Claude generated rhyming couplets, Anthropic found that it chose the rhyming word at the end of a line first, then wrote the rest of the line to lead up to it.

“The planning thing in poems blew me away,” says Batson. “Instead of at the very last minute trying to make the rhyme make sense, it knows where it’s going.”

Anthropic also found, among other things, that Claude “sometimes thinks in a conceptual space that is shared between languages, suggesting it has a kind of universal ‘language of thought’.”

Anywho, there’s apparently a long way to go with this research. According to Anthropic, “it currently takes a few hours of human effort to understand the circuits we see, even on prompts with only tens of words.” And the research doesn’t explain how the structures inside LLMs are formed in the first place.

But it has shone a light on at least some parts of how these oddly mysterious AI beings—which we have created but don’t understand—actually work. And that has to be a good thing.
